Math Education with Large Language Models: Peril or Promise?

Abstract

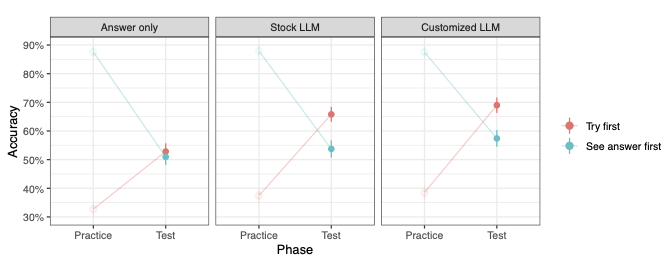

The widespread availability of large language models (LLMs) has provoked both fear and excitement in the domain of education. On one hand, there is the concern that students will offload their coursework to LLMs, limiting what they themselves learn. On the other hand, there is the hope that LLMs might serve as scalable, personalized tutors. Here we conduct a large, pre-registered experiment involving 1200 participants to investigate how exposure to LLM-based explanations affects learning. In the experiment’s learning phase, we gave participants practice problems and manipulated two key factors in a between-participants design: first, whether participants were required to attempt a problem before or after seeing the correct answer, and second, whether they were shown only the answer or were also shown an LLM-generated explanation of the answer. Subsequently, all participants were tested on new questions to assess how well they had learned the underlying concepts. Overall, we found that LLM-based explanations positively impacted learning relative to seeing only correct answers. The benefits were largest for participants who attempted problems on their own before consulting LLM explanations, but, surprisingly, the benefit held even for participants who were exposed to LLM explanations before attempting to solve the practice problems themselves. An accompanying qualitative analysis revealed that these boosts in performance were indeed due to participants adopting the strategies they were shown, and that exposure to LLM explanations increased how much participants felt they had learned and decreased the perceived difficulty of the test problems.